Markov Chain Process

- By Carlos Polanco¹


¹ Department of Electromechanical Instrumentation, Instituto Nacional de Cardiología Ignacio Chávez, México | Faculty of Sciences, Universidad Nacional Autónoma de México, México

- Source: Markov Chain Process: Theory and Cases, pp. 28-36

- Publication Date: June 2023

- Language: English

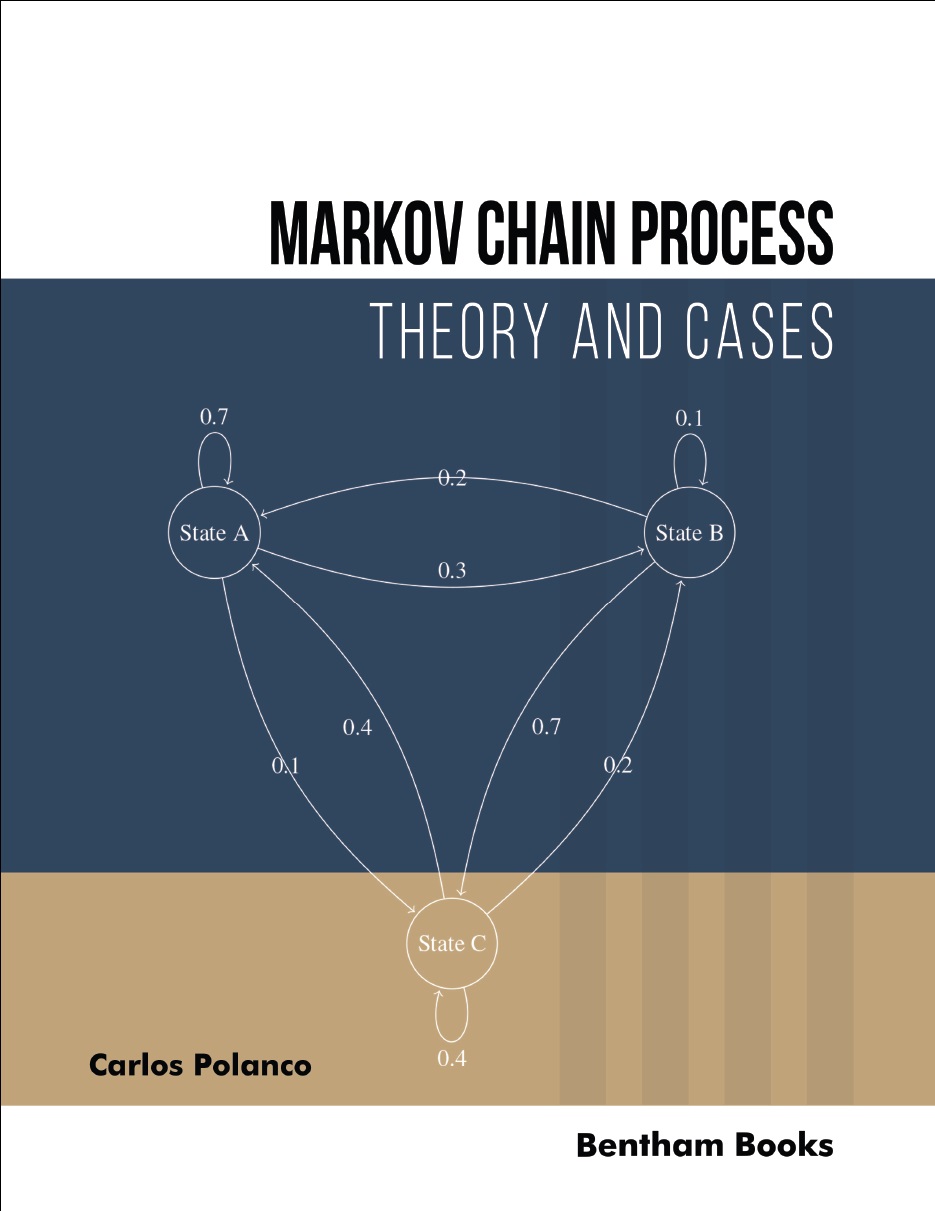

In this chapter, building on the historical introduction given in the previous chapters, we introduce and exemplify all the components of a Markov Chain Process: the initial state vector, the Markov property (or memoryless property), the matrix of transition probabilities, and the steady-state vector. A Markov Chain Process is formally defined and classified into two types, the Discrete-Time Markov Chain Process and the Continuous-Time Markov Chain Process, according to whether the time between states in a random walk is discrete or continuous. Each component is illustrated with examples, and all the examples are solved analytically.
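To make the components listed above concrete, here is a minimal plain-Python sketch. It is not the chapter's own code and assumes a finite state space and hypothetical example matrices. An initial state vector is pushed through a transition probability matrix `P` one step at a time. Each step uses only the current vector, which is the Markov property. The steady-state vector is obtained by solving πP = π with the entries of π summing to 1. For the continuous-time case the sketch assumes a generator matrix `Q`, whose rows sum to 0, and solves πQ = 0. The function names (`step`, `distribution_after`, `dtmc_steady_state`, `ctmc_steady_state`) are illustrative only.

```python
# Minimal sketch of Markov chain components in plain Python (no libraries).
# The matrices in the demo are hypothetical examples, not taken from the chapter.

def step(x, P):
    """One DTMC transition of a row state vector: x_{n+1} = x_n P."""
    n = len(P)
    return [sum(x[i] * P[i][j] for i in range(n)) for j in range(n)]


def distribution_after(x0, P, steps):
    """State vector after `steps` transitions from the initial state vector x0."""
    x = list(x0)
    for _ in range(steps):
        x = step(x, P)
    return x


def _stationary(M):
    """Solve pi M = 0 subject to sum(pi) = 1 by Gaussian elimination.

    Assumes a unique solution exists (e.g. an irreducible finite chain).
    """
    n = len(M)
    # Transpose so the unknowns pi form a column: M^T pi^T = 0.
    A = [[float(M[j][i]) for j in range(n)] for i in range(n)]
    b = [0.0] * n
    # One equation is redundant; replace it with the normalization sum(pi) = 1.
    A[-1] = [1.0] * n
    b[-1] = 1.0
    for col in range(n):
        # Partial pivoting for numerical stability.
        pivot = max(range(col, n), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        b[col], b[pivot] = b[pivot], b[col]
        for r in range(col + 1, n):
            factor = A[r][col] / A[col][col]
            for c in range(col, n):
                A[r][c] -= factor * A[col][c]
            b[r] -= factor * b[col]
    # Back substitution on the upper-triangular system.
    pi = [0.0] * n
    for r in range(n - 1, -1, -1):
        pi[r] = (b[r] - sum(A[r][c] * pi[c] for c in range(r + 1, n))) / A[r][r]
    return pi


def dtmc_steady_state(P):
    """Steady-state vector of a DTMC: pi P = pi, i.e. pi (P - I) = 0."""
    n = len(P)
    return _stationary([[P[i][j] - (1.0 if i == j else 0.0) for j in range(n)]
                        for i in range(n)])


def ctmc_steady_state(Q):
    """Steady-state vector of a CTMC with generator Q (rows sum to 0): pi Q = 0."""
    return _stationary(Q)


if __name__ == "__main__":
    P = [[0.9, 0.1],   # hypothetical 2-state transition probability matrix
         [0.5, 0.5]]
    x0 = [1.0, 0.0]    # initial state vector: start in state 0
    print(distribution_after(x0, P, 2))  # approx [0.86, 0.14]
    print(dtmc_steady_state(P))          # approx [0.8333, 0.1667]
    Q = [[-2.0, 2.0],  # hypothetical generator: leave state 0 at rate 2,
         [1.0, -1.0]]  # leave state 1 at rate 1
    print(ctmc_steady_state(Q))          # approx [0.3333, 0.6667]
```

Solving the linear system directly, rather than multiplying by `P` until the vector stops changing, avoids choosing a convergence tolerance. It does assume that the stationary distribution is unique, which holds for an irreducible finite chain.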

Hardbound ISBN: 9789815080483

Ebook ISBN: 9789815080476
